Search engines can only rank what they can reach. Technical SEO makes sure they can. It’s the part of optimization that focuses on how your website works under the hood, like how fast it loads, how it’s structured, and how easily crawlers and people can move through it.

Think of it as building solid infrastructure. The content may win the audience, but technical SEO makes sure the stage doesn’t collapse mid-performance. When the setup is clean, search engines can index your pages properly, and visitors get a site that feels effortless to use.

This guide walks through how to get those fundamentals right, from crawlability and speed to structured data and more, so your site performs well and keeps doing so over time.

How Search Engines Crawl, Render, and Index Your Website

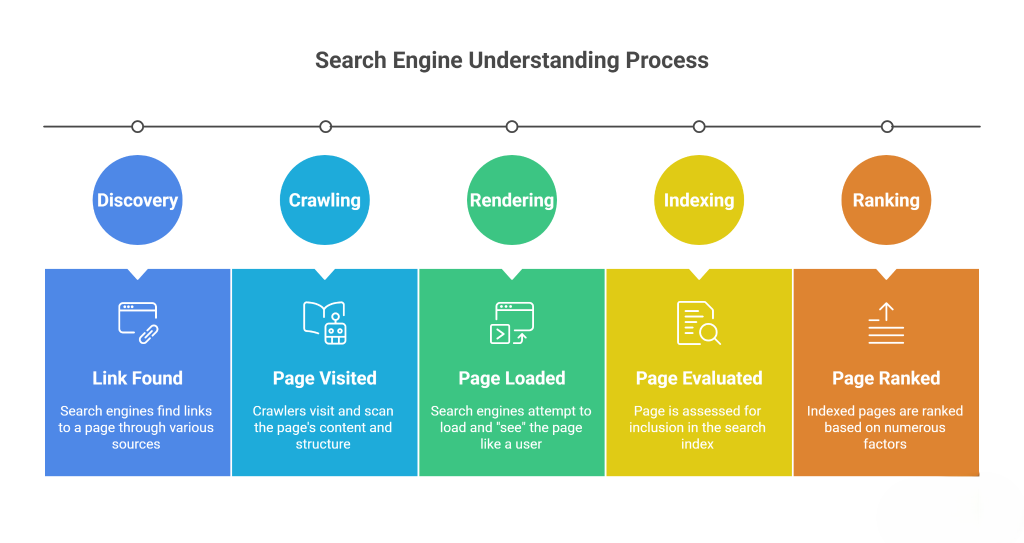

Before a page can rank, it has to be found, read, and understood. Search engines follow a straightforward path to make that happen: discovery, crawling, rendering, indexing, and finally ranking. Knowing what happens at each stage helps you spot where things can go wrong.

Discovery and crawling

Search engines discover pages through links, sitemaps, and external mentions. Once a page is found, crawlers follow links across your site to map its structure. Good internal linking and an updated XML sitemap make that job easy. A clear robots.txt file tells crawlers what to skip so they don’t waste time on admin pages or duplicates.

Rendering

After crawling, the search engine tries to load your page the way a visitor would. If key content depends on JavaScript that loads slowly or inconsistently, it might never be seen. Keeping essential text and links in HTML, or using server-side rendering, makes your pages more reliable to process.

Indexing

Once a page is rendered, the system decides whether to keep it in the index. Pages carrying a noindex tag, pages judged to be duplicates, and URLs that redirect elsewhere are left out. Canonical tags address the duplicate case by confirming which version of similar pages should stay visible.
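To see which of these signals a page is sending, you can read them straight from its HTML. The sketch below is one minimal way to do that with Python's standard library; the sample markup and the `example.com` URLs are made up for illustration.

```python
from html.parser import HTMLParser


class IndexSignalParser(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.robots = []       # directives found in <meta name="robots">
        self.canonical = None  # href found in <link rel="canonical">

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "robots":
            self.robots += [d.strip().lower() for d in attr.get("content", "").split(",")]
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href")


def index_signals(html, page_url):
    """Summarize the indexing signals a page sends about itself."""
    parser = IndexSignalParser()
    parser.feed(html)
    return {
        "noindex": "noindex" in parser.robots,
        "canonical": parser.canonical,
        # True when the page hands its indexing claim to a different URL.
        "canonical_points_elsewhere": parser.canonical is not None
        and parser.canonical != page_url,
    }


# Hypothetical page used only for illustration.
sample = """
<html><head>
  <meta name="robots" content="noindex, follow">
  <link rel="canonical" href="https://example.com/shoes">
</head><body></body></html>
"""
print(index_signals(sample, "https://example.com/shoes?color=red"))
```

A page that reports `noindex` or a canonical pointing elsewhere is asking to be left out of the index, which is fine when intended and a problem when it is not.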

Ranking

Only indexed pages can rank. From there, technical factors like speed, mobile performance, and page stability influence how your content competes in results. Technical SEO doesn’t win rankings on its own, but it clears the path so everything else can work.

Running periodic checks in PageSpeed Insights, Google Search Console, or a crawler like Screaming Frog shows where that path might be blocked. Keeping it clear is what lets search engines understand your site the same way a visitor does, without obstacles.

How to Build an SEO-Friendly Site Structure That Boosts Crawlability

A good site structure makes it easy for both people and search engines to find things. When pages are organized logically, crawlers can move through the site without missing anything, and visitors always know where they are. Five ways to build a solid site structure:

- Keep it simple: Every important page should be reachable within a few clicks from the homepage. Group related pages together and link them in ways that make sense. Avoid “orphan pages” (URLs that nothing links to), because crawlers that discover pages by following links will never reach them.

- Make URLs clear and consistent: Short, descriptive URLs help everyone understand what a page is about. Use lowercase letters, hyphens instead of underscores, and avoid random parameters. A clean path like /technical-seo/basics is better than /page?id=1234.

- Link internally with purpose: Internal links share authority and help search engines understand how your content fits together. Use clear, descriptive anchor text and connect pages that naturally relate to each other. This improves navigation and helps crawlers follow the flow of your site.

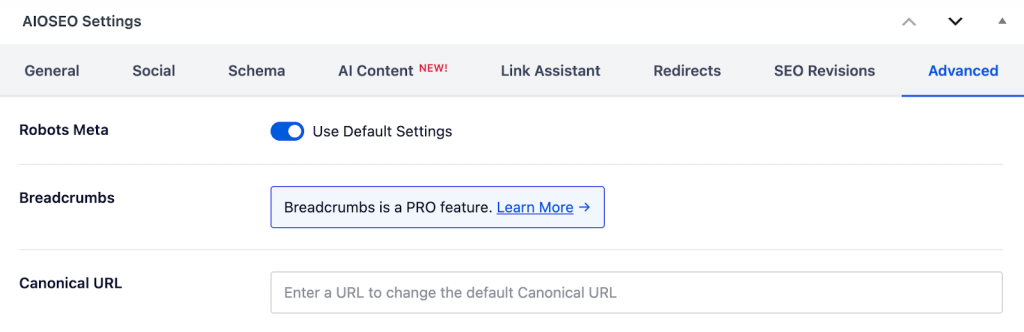

- Add breadcrumbs: Breadcrumb navigation gives users a clear path back to higher-level pages and adds structure that search engines can display in results. It’s simple to set up and makes large sites easier to explore.

- Mind your crawl budget: Search engines only crawl a limited number of pages at a time. Keep that crawl budget focused by avoiding endless parameter URLs, outdated content, or unnecessary redirects.
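Click depth and orphan pages from the list above can both be measured once you have a map of your internal links. Here is a rough sketch using a breadth-first search from the homepage; the link graph is invented, and in practice it would come from a crawler export.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage; returns {url: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
links = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/technical-seo"],
    "/services": [],
    "/blog/technical-seo": ["/"],
    "/old-landing-page": ["/"],  # links out, but nothing links to it
}

depths = click_depths(links, "/")
orphans = sorted(set(links) - set(depths))  # known pages the search never reached

print(depths)
print(orphans)  # ['/old-landing-page']
```

Pages with a high depth are candidates for better internal links, and anything in `orphans` needs at least one link pointing to it.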

A well-planned structure keeps your site lightweight, organized, and discoverable. This foundation helps every other part of SEO perform better.
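The URL guidelines in the list above (lowercase, hyphens, no stray characters) are easy to enforce in code when pages are created. The helper below is a small sketch of one way to do it; the function name `slugify` and the sample titles are illustrative, not part of any particular CMS.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Strip accents so "é" becomes "e" instead of being dropped entirely.
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Collapse every run of non-alphanumeric characters (spaces, underscores,
    # punctuation) into a single hyphen, then trim hyphens from the ends.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


print(slugify("Technical SEO: The Basics"))     # technical-seo-the-basics
print(slugify("site_speed & Core Web Vitals"))  # site-speed-core-web-vitals
```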

How to Use Sitemaps, Robots.txt, and Indexing Signals for Better SEO

Search engines don’t magically know which parts of your site matter most. You have to guide them, and that’s where a few quiet but crucial tools come in: your sitemap, robots.txt file, and indexing signals. Together, they tell crawlers what to explore, what to skip, and which version of a page to trust.

Start with your XML sitemap. It’s basically a directory of the pages you want indexed, a way to make sure search engines can find your key content even if some pages sit deeper in the structure. Keep it clean: no duplicates, no broken URLs, and no pages blocked by robots.txt. Update it when you publish new content or remove old pages, and resubmit it in Google Search Console when big changes happen. You can generate a sitemap with tools like Octopus or plugins like AIOSEO.
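If you would rather generate the file yourself, the format is simple. This is a minimal sketch that builds a sitemap with Python's standard library and skips duplicate URLs; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (url, last_modified) pairs; returns sitemap XML as text."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url, lastmod in pages:
        if url in seen:  # keep the sitemap free of duplicate entries
            continue
        seen.add(url)
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url          # special characters are escaped
        ET.SubElement(entry, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; the repeated homepage is dropped.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/technical-seo/basics", "2024-02-01"),
    ("https://example.com/", "2024-01-15"),
]
print(build_sitemap(pages))
```

The sketch only handles deduplication. Filtering out broken URLs and pages blocked by robots.txt would have to happen before the list reaches this function.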

Then there’s robots.txt, the file that acts like a bouncer for your website. It tells crawlers which areas they can and can’t access, and you can see what yours looks like by appending /robots.txt after your main URL (e.g., columncontent.com/robots.txt). Blocking admin panels, test directories, or certain scripts is fine; blocking your whole content folder by accident is not. Always double-check your rules, especially after redesigns or CMS updates.
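One way to double-check your rules is to test a list of must-stay-crawlable URLs against them. The sketch below uses Python's built-in `urllib.robotparser`; the rules and URLs are hypothetical. Note that this parser does simple prefix matching and may not interpret wildcard patterns the way Google's crawler does, so treat it as a sanity check rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /staging/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def blocked_urls(parser, urls, agent="*"):
    """Return the URLs that the given user agent is not allowed to fetch."""
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Pages that should always remain crawlable (illustrative list).
must_stay_crawlable = [
    "https://example.com/",
    "https://example.com/blog/technical-seo",
    "https://example.com/staging/new-home",  # accidentally under a blocked path
]
print(blocked_urls(parser, must_stay_crawlable))
```

Running a check like this after every redesign or CMS update catches the accidental block before search engines do.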

When it comes to duplicate pages, canonical tags are your best signal. They point search engines toward the preferred version of similar content and prevent diluted ranking power. If your site runs on both HTTP and HTTPS or has tracking parameters in URLs, canonicalization keeps things clean and consistent.
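To make that concrete, here is a small sketch that computes a preferred URL by forcing HTTPS, lowercasing the host, and dropping common tracking parameters. The parameter list is an assumption and should be adapted to whatever your analytics setup actually appends.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of tracking parameters; extend it to match your own campaigns.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid",
}


def canonical_url(url):
    """Return the HTTPS, tracking-free form of a URL for use in a canonical tag."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    # The fragment is dropped because it never reaches the server.
    return urlunsplit(("https", parts.netloc.lower(), parts.path or "/", urlencode(query), ""))


print(canonical_url("http://Example.com/shoes?utm_source=news&color=red#top"))
# https://example.com/shoes?color=red
```

The result is what would go in the `href` of the page's `<link rel="canonical">` tag.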

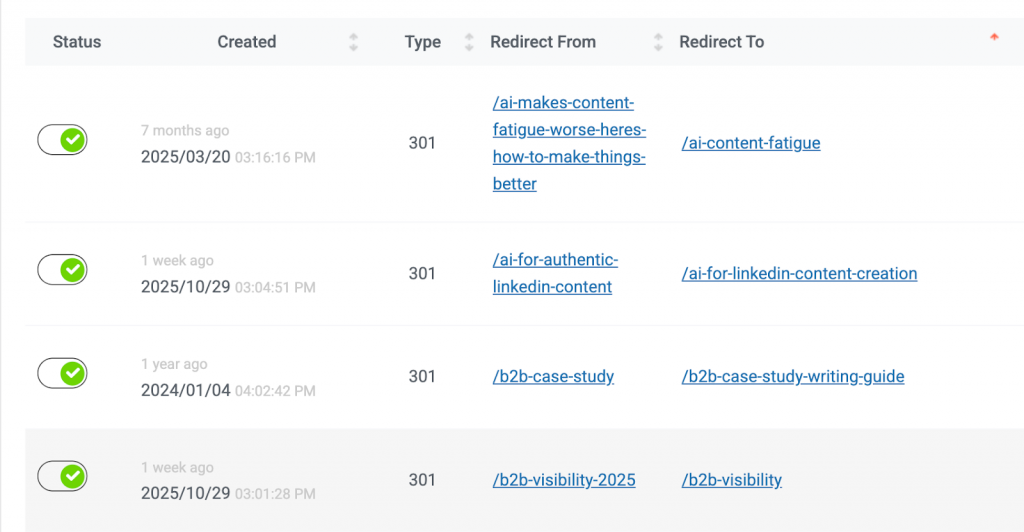

And don’t forget the quiet housekeeping — proper 301 redirects for moved pages, helpful custom 404s for removed ones, and selective use of noindex tags for pages that shouldn’t appear in results. Each of these small signals adds up to a site that communicates clearly with search engines.
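Redirects are worth auditing because they tend to pile up into chains or loops over time. The following sketch walks a redirect map and reports how many hops a URL takes and whether it loops; the map itself is invented and would normally come from your server config or a crawl report.

```python
def resolve_redirect(start, redirects, max_hops=10):
    """Follow a URL through a redirect map; returns (final_url, hops, looped)."""
    seen = [start]
    current = start
    while current in redirects and len(seen) <= max_hops:
        current = redirects[current]
        if current in seen:  # we have been here before: a redirect loop
            return current, len(seen), True
        seen.append(current)
    return current, len(seen) - 1, False


# Hypothetical redirect map: source path -> target path.
redirects = {
    "/old": "/interim",
    "/interim": "/new",  # /old takes two hops; point it straight at /new
    "/a": "/b",
    "/b": "/a",          # loop
}

print(resolve_redirect("/old", redirects))  # ('/new', 2, False)
print(resolve_redirect("/a", redirects))    # ('/a', 2, True)
```

Anything with more than one hop can usually be collapsed into a single 301 to the final destination.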

In short, you’re not just telling crawlers where to go, you’re teaching them how to see your site the way you intend. That clarity is what keeps your pages visible, organized, and easy to trust.

How to Improve Site Speed and Core Web Vitals for Higher Rankings

Speed isn’t just about convenience, it’s one of the clearest signals that a site is healthy. When pages load slowly or shift around while they load, users leave, and search engines notice. Performance is the first impression your site makes, long before anyone reads a word.

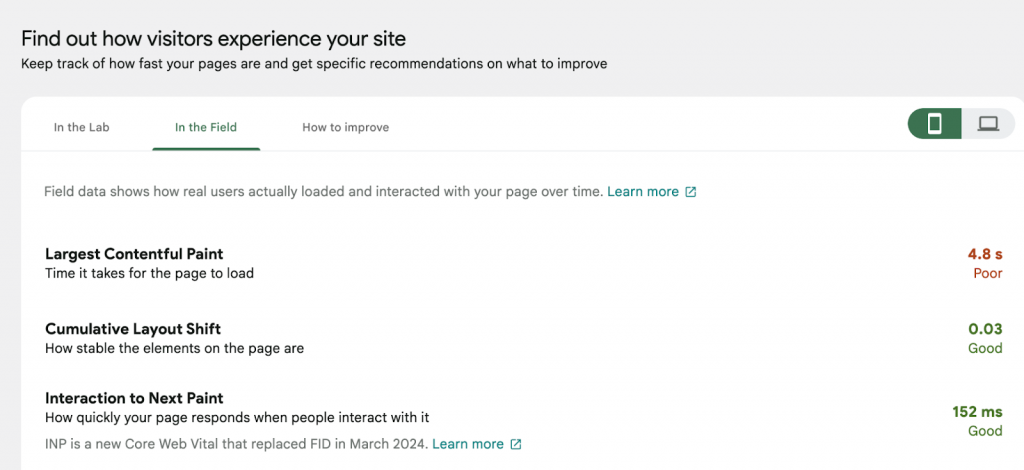

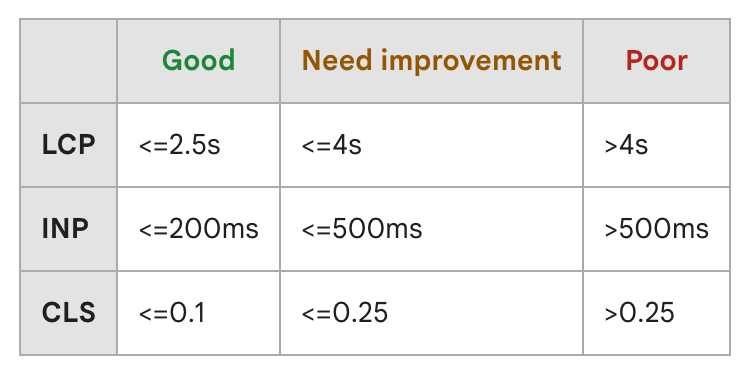

Google’s Core Web Vitals are the best way to measure that experience. They focus on three things:

- How fast the main content appears (Largest Contentful Paint, or LCP)

- How quickly a page responds when someone interacts (Interaction to Next Paint, or INP)

- How stable the layout stays as it loads (Cumulative Layout Shift, or CLS)

The sweet spots are simple: LCP under 2.5 seconds, INP under 200 milliseconds, and CLS below 0.1.
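Those targets translate directly into a simple rating function. The sketch below uses the "good" limits from this section; the "poor" cut-offs (4 seconds, 500 milliseconds, 0.25) are not stated here and come from Google's published Core Web Vitals guidance. The sample measurements are made up.

```python
# (good limit, poor limit) per metric. LCP in seconds, INP in milliseconds,
# CLS unitless. The "poor" limits are taken from Google's guidance, not this article.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate(metric, value):
    """Classify a Core Web Vitals measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical field measurements for one page.
measurements = {"LCP": 2.1, "INP": 350, "CLS": 0.31}
for metric, value in measurements.items():
    print(metric, value, rate(metric, value))
```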

If you want to improve those scores, start with the basics:

- Compress images and serve them in modern formats like WebP.

- Minify CSS and JavaScript to remove unnecessary code.

- Enable caching and use a content delivery network (CDN) for faster delivery.

- Upgrade to HTTP/2 (or newer) if your host supports it.

- Prioritize critical assets and defer anything that isn’t needed right away.

You can test and monitor all of this easily with tools like PageSpeed Insights, Lighthouse, or GTmetrix.

Good performance isn’t just a technical win, it’s an act of respect. Fast pages show that you value people’s time. They make your site feel lighter, smoother, and more professional. And over time, those gains stack up: better rankings, lower bounce rates, and visitors who actually stay long enough to read what you worked so hard to write.

How to Create a Mobile-First and Accessible Website for SEO Success

Most searches happen on mobile devices now, so search engines judge your site primarily by how it performs there. A site that looks polished on desktop but clunky or incomplete on mobile is, to Google, a broken site.

Mobile-first indexing means Google primarily uses the mobile version of your pages for crawling, indexing, and ranking. If important content or metadata doesn’t appear on mobile, it may be invisible. Keep both versions consistent: the same headings, the same structured data, and the same internal links.

Design-wise, mobile friendliness isn’t complicated, but it’s easy to overlook. Text should be large enough to read without zooming, buttons should have breathing room, and pop-ups shouldn’t cover the entire screen. Navigation needs to be straightforward: a few clear options beat a maze of nested menus.

You’ll check these points as part of normal web development, and page builders like Elementor make this easy.

Here’s a quick pulse check for mobile usability:

- Responsive layout: the page adjusts naturally to any screen size.

- Fast loading: optimize for small screens and slower connections.

- Stable content: define image and ad sizes to prevent layout shifts.

- Touch-friendly design: large tap targets, generous spacing.

Accessibility overlaps with this work. Alt text, clear color contrast, and logical heading structures make your content usable for more people, and easier for crawlers to interpret.
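Two of those checks, missing alt text and skipped heading levels, are easy to automate. Here is a rough sketch using Python's standard HTML parser; the sample markup is invented. It only flags images with no `alt` attribute at all, since an empty `alt=""` is a legitimate way to mark decorative images.

```python
from html.parser import HTMLParser


class AccessibilityCheck(HTMLParser):
    """Flags images without an alt attribute and headings that skip a level."""

    def __init__(self):
        super().__init__()
        self.images_missing_alt = []  # src values of <img> tags with no alt
        self.heading_jumps = []       # (previous level, new level) pairs
        self._last_level = 0

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "img" and "alt" not in attr:
            self.images_missing_alt.append(attr.get("src"))
        elif len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            level = int(tag[1])
            if level > self._last_level + 1:  # e.g. an h1 followed directly by an h3
                self.heading_jumps.append((self._last_level, level))
            self._last_level = level


# Hypothetical page fragment.
page = (
    '<h1>Guide</h1><img src="hero.png">'
    '<h3>Details</h3><img src="chart.png" alt="Crawl stats chart">'
)
check = AccessibilityCheck()
check.feed(page)
print(check.images_missing_alt)  # ['hero.png']
print(check.heading_jumps)       # [(1, 3)]
```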

How HTTPS and Site Security Improve SEO and User Trust

Security is one of those technical details people only notice when it’s missing. For search engines, though, it’s non-negotiable. A secure site protects visitors, builds trust, and sends a clear quality signal that your website is safe to browse.

The first step is simple: move everything to HTTPS. Use a valid SSL/TLS certificate, and make sure every internal link, image, and script points to the secure version. Redirect old HTTP URLs with 301s and update your sitemap and canonical tags accordingly. If any mixed-content warnings appear, fix them right away, because they undermine both user confidence and search performance.
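Mixed content usually comes from a handful of hard-coded `http://` references left in templates. A minimal sketch for finding them in a page's HTML follows; the tag list is an assumption covering the most common resource types, and the sample markup is made up.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Collects resources that an HTTPS page would load over plain HTTP."""

    # Assumed mapping of resource tags to the attribute that holds their URL.
    RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src", "link": "href"}

    def __init__(self):
        super().__init__()
        self.insecure = []  # (tag, url) pairs

    def handle_starttag(self, tag, attrs):
        wanted = self.RESOURCE_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))


# Hypothetical page fragment with two insecure references.
page = (
    '<img src="http://example.com/logo.png">'
    '<script src="https://example.com/app.js"></script>'
    '<link rel="stylesheet" href="http://example.com/site.css">'
)
finder = MixedContentFinder()
finder.feed(page)
print(finder.insecure)
```

Each entry it reports should be switched to `https://` or a relative URL.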

Beyond encryption, good site integrity comes down to maintenance. Outdated software and forgotten plugins are open doors for attackers. Keep your CMS, themes, and plugins up to date, and remove anything you no longer use.

A short checklist helps keep things tight:

- Run regular malware and security scans.

- Check for broken links and redirect loops.

- Back up your site before major updates.

- Monitor server uptime and error logs.

- Review Search Console for any security alerts.

A site that loads securely, runs smoothly, and stays online consistently earns more than rankings, it earns trust. That reliability builds a reputation both users and search engines remember.

How to Use Structured Data and Schema Markup to Improve Search Visibility

Search engines are smart, but they still need context. Structured data gives them that context by labeling what’s on a page, whether it’s a product, a recipe, a review, or an article. When search engines understand your content clearly, they can display it in richer, more useful ways.

Structured data uses schema markup, a standardized vocabulary that describes content directly in your HTML. It doesn’t change what users see; it simply helps crawlers read it better. Most sites use JSON-LD format because it’s cleaner and easier to maintain.

Start with what matters most. Common schema types include:

- Organization or LocalBusiness: adds name, address, and contact info.

- Article or BlogPosting: highlights headline, date, and author details.

- Product or Review: shows ratings, prices, and availability.

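- FAQPage or HowTo: describes question-and-answer or step-by-step content. Google has sharply limited rich results for these two types since 2023, so treat any enhanced snippet as a bonus rather than a given.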

Once markup is added, always test it using the Rich Results Test or the Schema.org validator. Small errors can break eligibility for rich results, and fixing them early saves a lot of confusion later.
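As an illustration of what JSON-LD markup looks like, here is a small sketch that builds an Article snippet ready to paste into a page's `<head>`. The headline, author name, date, and URL are placeholders, and the properties shown are only a minimal subset of what schema.org's Article type supports.

```python
import json


def article_jsonld(headline, author, date_published, url):
    """Build a JSON-LD <script> block describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    # Escape "</" so a stray "</script>" in the data cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Placeholder values for illustration only.
print(article_jsonld(
    "Technical SEO Basics",
    "Jane Doe",
    "2024-01-15",
    "https://example.com/technical-seo/basics",
))
```

Generating the markup from the same data that renders the page helps keep the two from drifting apart.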

When done right, structured data improves visibility, click-through rate, and the way your content appears in search.

How to Manage Duplicate and Multilingual Content for Global SEO

Duplicate or regional versions of a page can easily confuse search engines. Without clear signals, they don’t know which version to prioritize, and that can split your visibility across multiple URLs. Managing these variations cleanly keeps your site focused and easier to rank.

Start with canonical tags. They tell search engines which version of a page is the “main” one when several URLs show the same or similar content. This is especially useful for sites that use tracking parameters, product filters, or different device URLs. Each canonical tag should either point to the preferred page or reference itself if it’s the original.

Next, keep your domain versions consistent. Pick one format, either with or without “www”, and stick to it. Redirect everything else to that version with 301s. The same rule applies to protocol: once you move to HTTPS, make sure every HTTP URL redirects to its HTTPS counterpart.

If your site targets audiences in different languages or countries, use hreflang attributes. These tags tell search engines which language and region each page is meant for, preventing different versions from competing in the same search results. They also help users land on the version written for them.
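In practice, every language version of a page lists all the versions, itself included. The sketch below generates that set of tags from a mapping of language-region codes to URLs; the codes and URLs are examples, and `x-default` marks the fallback for visitors who match none of them.

```python
def hreflang_tags(versions, default=None):
    """versions: {language-region code: URL}. Returns one <link> tag per version."""
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{url}">'
        for code, url in sorted(versions.items())
    ]
    if default:
        # Fallback shown to users whose language or region has no dedicated page.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{default}">')
    return tags


# Hypothetical regional versions of one page.
versions = {
    "en-us": "https://example.com/us/",
    "en-gb": "https://example.com/uk/",
    "fr-fr": "https://example.com/fr/",
}
for tag in hreflang_tags(versions, default="https://example.com/"):
    print(tag)
```

The same block of tags goes in the `<head>` of each version, so the references stay reciprocal.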

Finally, make regular checks part of your workflow:

- Scan for duplicate title tags and meta descriptions.

- Review your canonical and hreflang setup after site updates.

- Remove or redirect outdated duplicates.
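The first check in that list can be scripted in a few lines. This sketch groups pages by normalized title and reports any title used more than once; the page data is invented and would normally come from a crawl export.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """pages: {url: title}. Returns {normalized title: [urls]} for repeated titles."""
    groups = defaultdict(list)
    for url, title in pages.items():
        # Ignore case and extra whitespace so near-identical titles match.
        normalized = " ".join(title.lower().split())
        groups[normalized].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl output.
pages = {
    "/guide": "Technical SEO Guide",
    "/guide?ref=nav": "technical  seo guide ",
    "/pricing": "Pricing",
}
print(duplicate_titles(pages))
# {'technical seo guide': ['/guide', '/guide?ref=nav']}
```

The same approach works for meta descriptions by swapping in a different field.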

Managing content variations keeps your site clear and consistent. When search engines can see which version matters most, every signal you send reinforces the right pages and strengthens your overall visibility.

How to Optimize JavaScript Frameworks for SEO

Websites have become more dynamic, but search engines still rely on structure to make sense of them. Frameworks like React, Angular, and Vue can create rich, fast user experiences, yet they also introduce challenges for crawling and indexing if not handled carefully.

Search engines process pages in two stages: first they crawl the raw HTML, then they render the JavaScript. If your content only appears after the scripts load, it might never be indexed. To make sure nothing important gets lost, use one of these rendering strategies:

- Server-side rendering (SSR): The server delivers a fully rendered page to both users and crawlers.

- Pre-rendering: Static HTML versions of key pages are generated ahead of time.

- Dynamic rendering: Crawlers see a rendered version while users get the live, client-side experience. Google describes this as a workaround rather than a long-term solution.

Each option ensures that critical text, images, and links are visible in the page source, not hidden behind scripts.

JavaScript-heavy sites also tend to generate endless variations of the same URL through filters or parameters. Manage that crawl waste with canonical tags, noindex rules, and robots.txt rules for parameter patterns that never need crawling. Google retired Search Console’s URL Parameters tool in 2022, so these on-site signals now do that job.

Finally, test how search engines actually see your pages. Use the “URL Inspection” tool in Search Console or a crawler with JavaScript rendering. Compare what’s in the HTML to what users see in the browser; if they don’t match, it’s time to adjust.
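A quick first pass at that comparison is to check whether your key phrases exist in the raw HTML before any script runs. The sketch below extracts visible text from unrendered HTML and lists the phrases that are missing; the sample page and phrases are made up, and it is not a substitute for a real rendering test.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text outside <script> and <style> from unrendered HTML."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0  # greater than zero while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def missing_from_raw_html(raw_html, must_have):
    """Return the phrases that do not appear in the page's pre-JavaScript text."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in must_have if phrase.lower() not in text]


# Hypothetical page: the heading is in the HTML, the pricing text is injected by JS.
raw = '<h1>Technical SEO</h1><div id="root"></div><script>render("Pricing plans")</script>'
print(missing_from_raw_html(raw, ["Technical SEO", "Pricing plans"]))
# ['Pricing plans']
```

Any phrase it reports depends on JavaScript to appear, which makes it a candidate for server-side rendering or pre-rendering.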

Best Technical SEO Tools and Audits to Maintain Site Performance

Technical SEO doesn’t end once a site is optimized. Code changes, new pages appear, plugins update, and suddenly something that worked perfectly starts slipping. Regular audits are how you catch those changes before they become problems.

Start with the essentials. Google Search Console is your control room. It shows which pages are indexed, which aren’t, and why. It also tracks Core Web Vitals and structured data issues so you can fix them early. Bing Webmaster Tools offers similar insight for Microsoft’s ecosystem.

For deeper crawling and diagnostics, tools like Screaming Frog, Sitebulb, and Lumar (formerly DeepCrawl) can scan thousands of pages, flag broken links, identify redirect loops, and surface duplicate titles or meta tags. For performance tracking, PageSpeed Insights, Lighthouse, GTmetrix, and WebPageTest give measurable data on loading time and layout stability.

When you run SEO audits, focus on the areas that matter most:

- Crawlability and index coverage.

- Redirects and canonical consistency.

- Site speed and Core Web Vitals.

- Structured data accuracy.

- HTTPS and security status.

Don’t try to fix everything at once. Start with the issues that block crawling or affect users directly; those usually have the biggest impact. Then build a rhythm: monthly quick checks, quarterly deep audits, and a full review after any redesign.

Technical SEO is like site maintenance: most of the time, nothing’s visibly wrong, but when it is, it affects everything. Staying proactive keeps your site running cleanly and your SEO gains stable.

Great Content Only Ranks If Your Technical SEO Holds It Up

When a site runs smoothly, loads quickly, and stays secure, it sends a simple message: this is a place worth visiting. Those fundamentals don’t change with every update or algorithm tweak; they’re what give your content and links room to perform.

Keep your technical setup tidy, check it often, and treat it as ongoing maintenance rather than emergency repair. A site built on that kind of consistency won’t just rank; it will last.

Column helps brands build SEO content strategies that align strong technical SEO foundations with clear, consistent messaging. Get in touch to strengthen your content performance from the ground up.

Johnson is a Content Strategist at Column. He helps brands craft content that drives visibility and results. He studied Economics at the University of Ibadan and brings years of experience in direct response marketing, combining strategy, creativity, and data-backed thinking.

Connect with him on LinkedIn.